Reading a temperature sensor in Rust using Raspberry Pi GPIO

Intro

While learning Rust, one exercise I always wanted to try was working with GPIO and low-level device communication. Simple examples like configuring a button handler or blinking an LED are not that exciting because they are not practical. Reading temperature and humidity, on the contrary, allows building something like a weather station or just drawing simple graphs. It would be much easier to use Python with existing libraries, but in my opinion, writing it in Rust combines the efficiency of C with the readability and safety of Python.

Devices

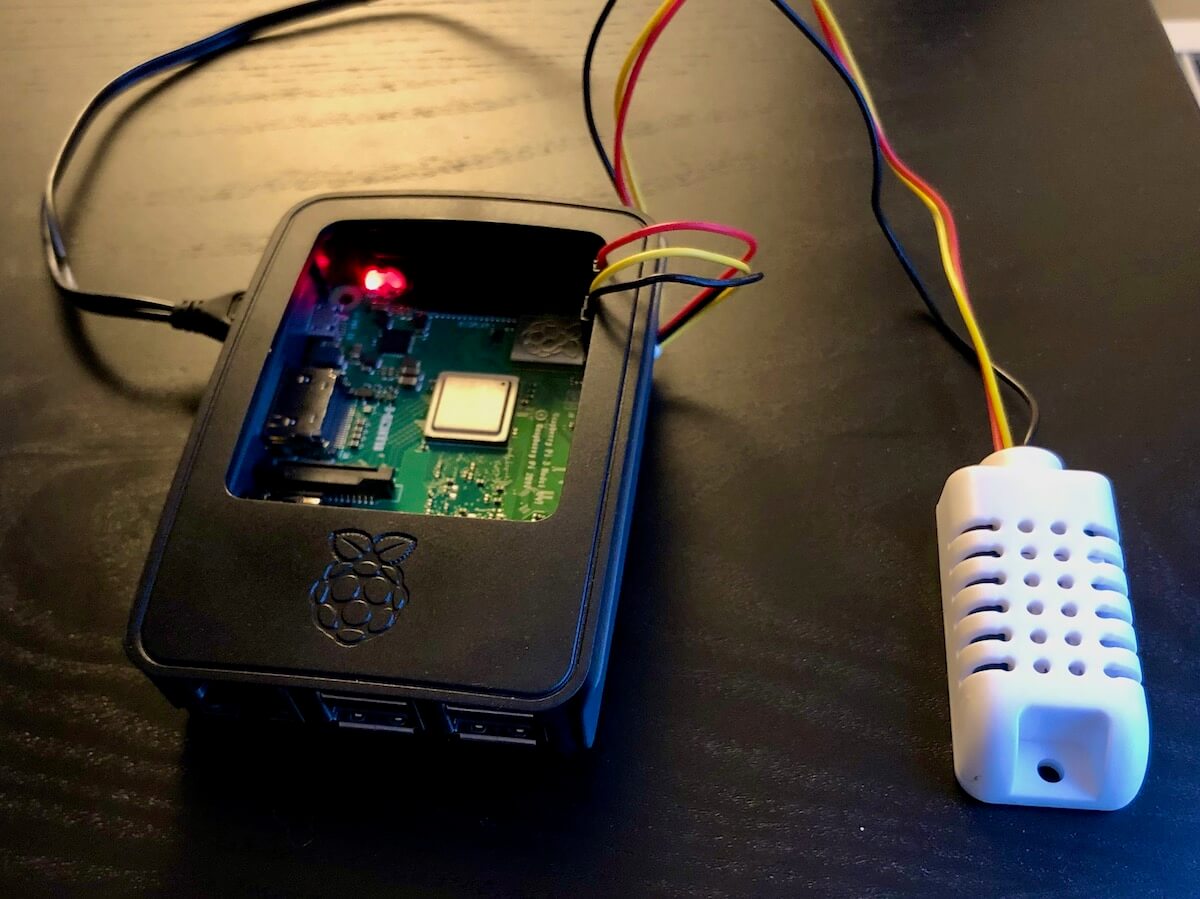

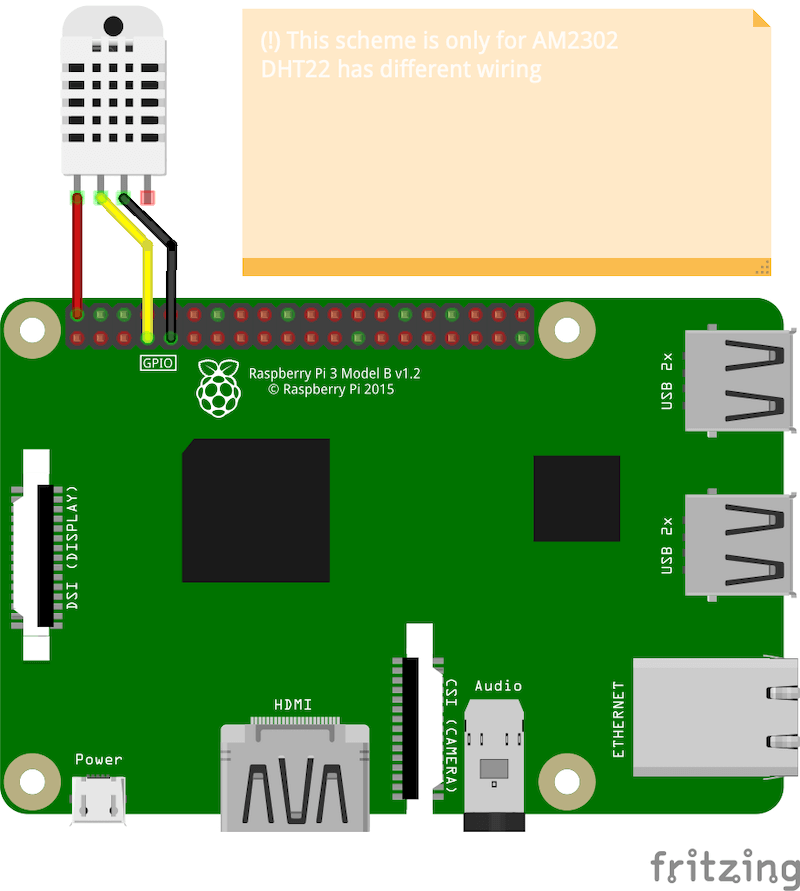

I use the AM2302 sensor, which is a wired version of the DHT22 sensor, and a Raspberry Pi 3 Model B+, as shown in the pictures above.

Connection

The `pinout` utility, which is part of the python-gpiozero package, shows the actual pin numbers for a particular Raspberry Pi model. Here is part of its output for the Raspberry Pi 3:

```text
  3V3  (1) (2)  5V
GPIO2  (3) (4)  5V
GPIO3  (5) (6)  GND
GPIO4  (7) (8)  GPIO14
  GND  (9) (10) GPIO15
```

- The red wire goes to pin 2, 5V. Most other examples use the 3.3V pin, but I decided to use 5V to avoid low-voltage issues.
- The black wire goes to pin 9, ground. Any other ground pin can be used.
- The yellow wire goes to pin 7, which is referred to as GPIO4, so `4` will be used in the code.

Code

There are several options for working with the GPIO interface in Rust.

I decided to use the gpio-cdev crate, as the sysfs interface is deprecated.

Prepare

First, add the dependency to `Cargo.toml`:

```toml
[dependencies]
gpio-cdev = "0.2"
```

gpio-cdev has clear and simple documentation, so we can write the following function to get a line object. As the documentation says about lines: “Lines are offset within gpiochip0”.

```rust
use gpio_cdev::{Chip, Line};

/// Opens the first GPIO chip and returns a handle to the line at the given offset.
fn get_line(gpio_number: u32) -> Line {
    let mut chip = Chip::new("/dev/gpiochip0").unwrap();
    chip.get_line(gpio_number).unwrap()
}
```

`gpio_number` in my case is 4.

Requesting data

According to the sensor documentation, to initiate a data transfer, the host has to pull the line low (set it to zero) for about 1 to 10 milliseconds.

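```rust
use std::{thread, time};
use gpio_cdev::{Line, LineRequestFlags};

// gpio-cdev represents line values as plain u8s.
const HIGH: u8 = 1;
const LOW: u8 = 0;

/// Pulls the data line low to ask the sensor for a new measurement.
fn do_init(line: &Line) {
    // Request the line as an output that starts high.
    let output = line
        .request(LineRequestFlags::OUTPUT, HIGH, "request-data")
        .unwrap();
    // Hold it low for 3 ms, inside the 1 to 10 ms window.
    output.set_value(LOW).unwrap();
    thread::sleep(time::Duration::from_millis(3));
    // `output` is dropped here, which releases the line.
}
```

Reading Data, Part 1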

There are two options for reading the data:

- Pulling the data manually, using a line request and the `.get_value()` method
- Subscribing to events from the line, using the line's `.events()` method

Let's try the first approach, as it is straightforward.

In the following example, we watch the line state for changes for 10 seconds:

```rust
// Poll the line for 10 seconds and print every change of state.
let contact_time = time::Duration::from_secs(10);
let input = line
    .request(LineRequestFlags::INPUT, HIGH, "read-data")
    .unwrap();
let mut last_state = input.get_value().unwrap();
let start = time::Instant::now();
while start.elapsed() < contact_time {
    let new_state = input.get_value().unwrap();
    if new_state != last_state {
        let timestamp = time::Instant::now();
        println!(
            "{:?}: change of state {} => {}",
            timestamp.duration_since(start), last_state, new_state
        );
        last_state = new_state;
    }
}
```

But how can we check whether all these changes are valid data, or whether the initialization works properly?

I prefer to stop here and start from the other end: write the data-decoding part first, then get back to reading and check whether the data is correct.

Decoding data

Here is an example from the documentation of how the data is represented:

> DATA = 16 bits RH data + 16 bits Temperature data + 8 bits check-sum
>
> Example: MCU has received 40 bits data from AM2302 as
>
> `0000 0010 1000 1100` (16 bits RH data) `0000 0001 0101 1111` (16 bits T data) `1110 1110` (check sum)
>
> Here we convert 16 bits RH data from binary system to the decimal system:
> `0000 0010 1000 1100` (binary) → 652 (decimal), RH = 652/10 = 65.2%RH
>
> Here we convert 16 bits T data from binary system to the decimal system:
> `0000 0001 0101 1111` (binary) → 351 (decimal), T = 351/10 = 35.1°C
>
> When the highest bit of temperature is 1, it means the temperature is below 0 degrees Celsius.
> Example: `1000 0000 0110 0101` (16 bits T data), T = minus 10.1°C
>
> Sum = `0000 0010` + `1000 1100` + `0000 0001` + `0101 1111` = `1110 1110`
> Check-sum = the last 8 bits of Sum = `1110 1110`

So let's express it in Rust!
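Before wrapping this in a struct, the arithmetic from the excerpt can be checked with a small standalone sketch. It takes the example as five bytes and decodes them. The top temperature bit is treated as a sign flag and the remaining 15 bits as the magnitude, as the excerpt describes. The function name `decode` and its tuple return type are my own, for illustration only.

```rust
/// Hypothetical helper: decodes five raw AM2302 bytes into
/// (humidity in %RH, temperature in °C), or None if the checksum is wrong.
fn decode(bytes: [u8; 5]) -> Option<(f32, f32)> {
    // Checksum: the last 8 bits of the sum of the first four bytes.
    let sum = bytes[..4].iter().fold(0u8, |acc, &b| acc.wrapping_add(b));
    if sum != bytes[4] {
        return None;
    }
    // Humidity is a plain big-endian 16-bit value in tenths of a percent.
    let raw_rh = u16::from_be_bytes([bytes[0], bytes[1]]);
    // Highest temperature bit is the sign; the other 15 bits are the magnitude.
    let magnitude = u16::from_be_bytes([bytes[2] & 0x7F, bytes[3]]) as f32;
    let temperature = if bytes[2] & 0x80 != 0 { -magnitude } else { magnitude };
    Some((raw_rh as f32 / 10.0, temperature / 10.0))
}

fn main() {
    // 0000 0010 1000 1100 | 0000 0001 0101 1111 | 1110 1110
    let example = [0b0000_0010, 0b1000_1100, 0b0000_0001, 0b0101_1111, 0b1110_1110];
    println!("{:?}", decode(example)); // Some((65.2, 35.1))
}
```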

I define a `Reading` struct that holds the actual temperature and humidity:

```rust
#[derive(Debug, PartialEq)]
pub struct Reading {
    temperature: f32, // °C
    humidity: f32,    // %RH
}
```

The data we receive is not always correct, so errors will happen. This enum represents them:

```rust
#[derive(Debug, PartialEq)]
pub enum CreationError {
    // Wrong number of input bits, should be 40
    WrongBitsCount,
    // Something went wrong with the conversion to bytes
    MalformedData,
    // Checksum (parity byte) validation failed
    ParityBitMismatch,
    // Value is outside of the specification
    OutOfSpecValue,
}
```

To build a `Reading`, we need a constructor that takes a slice of bits (one `u8` per bit) and converts it to a `Reading`.

It checks:

- That there are exactly 40 bits
- That the vector contains only 1s and 0s
- That the checksum is correct
- That the actual values are valid according to the specification

It also does all the necessary conversions.

Note that the `convert` function, which converts a vector of 1s and 0s to an integer, is described in another article of mine.
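The code below relies on `convert` and `ConversionError`. For readers who want something that compiles without the other article, here is a minimal stand-in. It assumes only what the call site implies: `convert` takes a chunk of at most 8 bits, most significant first, returns a `u8`, and fails on any value other than 0 or 1. The variant names `InvalidBit` and `Overflow` are hypothetical and may differ from the original.

```rust
/// Hypothetical error type; the original lives in a separate article.
#[derive(Debug, PartialEq)]
pub enum ConversionError {
    /// A chunk contained something other than 0 or 1.
    InvalidBit(u8),
    /// A chunk had more than 8 bits and would not fit into a u8.
    Overflow,
}

/// Folds a slice of 0/1 values (most significant bit first) into a u8.
pub fn convert(bits: &[u8]) -> Result<u8, ConversionError> {
    if bits.len() > 8 {
        return Err(ConversionError::Overflow);
    }
    bits.iter().try_fold(0u8, |acc, &bit| match bit {
        0 | 1 => Ok((acc << 1) | bit),
        other => Err(ConversionError::InvalidBit(other)),
    })
}

fn main() {
    println!("{:?}", convert(&[1, 1, 1, 0, 1, 1, 1, 0])); // Ok(238)
    println!("{:?}", convert(&[1, 2])); // Err(InvalidBit(2))
}
```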

Also, the specification does not say so explicitly, but the checksum is computed with overflow: only the lowest 8 bits of the sum are kept.
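To see why this matters, take four bytes whose sum exceeds 255. Plain `u8` addition would panic in a debug build. Wrapping addition keeps only the low 8 bits, the same result as taking `.0` of `overflowing_add`. The `checksum` function here is a hypothetical helper for illustration.

```rust
/// Sum of the four data bytes, truncated to 8 bits as the datasheet describes.
fn checksum(data: &[u8; 4]) -> u8 {
    data.iter().fold(0u8, |acc, &b| acc.wrapping_add(b))
}

fn main() {
    // 200 + 100 + 0 + 1 = 301, and 301 - 256 = 45
    println!("{}", checksum(&[200, 100, 0, 1])); // 45
    // Widening to u16 and masking the low byte gives the same value.
    let wide: u16 = [200u16, 100, 0, 1].iter().sum();
    println!("{}", (wide & 0xFF) as u8); // 45
}
```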

```rust
impl Reading {
    pub fn from_binary_vector(data: &[u8]) -> Result<Self, CreationError> {
        if data.len() != 40 {
            return Err(CreationError::WrongBitsCount);
        }
        // Pack every 8 bits into a byte; `convert` rejects anything but 0s and 1s.
        let bytes: Vec<u8> = data
            .chunks(8)
            .map(|chunk| convert(chunk))
            .collect::<Result<_, ConversionError>>()
            .map_err(|_| CreationError::MalformedData)?;

        // The checksum is the sum of the first four bytes, wrapping on overflow.
        let check_sum = bytes[..4].iter().fold(0u8, |acc, &b| acc.wrapping_add(b));
        if check_sum != bytes[4] {
            return Err(CreationError::ParityBitMismatch);
        }

        let raw_humidity = u16::from_be_bytes([bytes[0], bytes[1]]);
        // The highest temperature bit is the sign; the other 15 bits are the magnitude.
        let magnitude = i16::from_be_bytes([bytes[2] & 0x7F, bytes[3]]);
        let raw_temperature = if bytes[2] & 0x80 != 0 { -magnitude } else { magnitude };

        let humidity = raw_humidity as f32 / 10.0;
        let temperature = raw_temperature as f32 / 10.0;
        if temperature > 81.0 || temperature < -41.0 {
            return Err(CreationError::OutOfSpecValue);
        }
        if humidity < 0.0 || humidity > 99.9 {
            return Err(CreationError::OutOfSpecValue);
        }
        Ok(Reading { temperature, humidity })
    }
}
```

It is always a good idea to write tests for this. Here I only show a couple of them; the rest are available in the repository.

```rust
#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn wrong_parity_bit() {
        let result = Reading::from_binary_vector(&vec![
            0, 0, 0, 0, 0, 0, 1, 0, // humidity high
            1, 0, 0, 1, 0, 0, 1, 0, // humidity low
            0, 0, 0, 0, 0, 0, 0, 1, // temperature high
            0, 0, 0, 0, 1, 1, 0, 1, // temperature low
            1, 0, 1, 1, 0, 0, 1, 0, // parity
        ]);
        assert_eq!(result, Err(CreationError::ParityBitMismatch));
    }

    #[test]
    fn correct_reading() {
        let result = Reading::from_binary_vector(&vec![
            0, 0, 0, 0, 0, 0, 1, 0, // humidity high
            1, 0, 0, 1, 0, 0, 1, 0, // humidity low
            0, 0, 0, 0, 0, 0, 0, 1, // temperature high
            0, 0, 0, 0, 1, 1, 0, 1, // temperature low
            1, 0, 1, 0, 0, 0, 1, 0, // parity
        ]);
        let expected_reading = Reading {
            temperature: 26.9,
            humidity: 65.8,
        };
        assert_eq!(result, Ok(expected_reading));
    }
}
```

With all this in place, we can get back to reading the actual data and checking whether it is correct.

Reading Data, Part 2

How do I convert all these state changes to a vector of bits?

According to the documentation, the actual values are encoded by how long the signal stays in the high (1) state:

- `0`: 26 to 28 microseconds
- `1`: 70 microseconds

But another document from aosong.com that I found shows this table:

| Parameter | Min | Typical | Max | Unit |
|---|---|---|---|---|
| Signal “0” high time | 22 | 26 | 30 | μs |
| Signal “1” high time | 68 | 70 | 75 | μs |

So, allowing for measurement error, I take 35 microseconds as the cutoff between 1 and 0.
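The cutoff can be sanity-checked against the table. Every value in the “0” range (22 to 30 μs) must classify as 0, and every value in the “1” range (68 to 75 μs) must classify as 1. In this small sketch, `pulse_to_bit` and `CUTOFF_MICROS` are hypothetical names. The comparison mirrors the `as_micros() > 35` check used in `events_to_data`.

```rust
use std::time::Duration;

/// Cutoff between a "0" pulse and a "1" pulse, in microseconds.
const CUTOFF_MICROS: u128 = 35;

/// Classifies the length of a high pulse as a data bit.
fn pulse_to_bit(high_time: Duration) -> u8 {
    if high_time.as_micros() > CUTOFF_MICROS { 1 } else { 0 }
}

fn main() {
    // Min, typical and max high times from the table.
    for micros in [22, 26, 30] {
        assert_eq!(pulse_to_bit(Duration::from_micros(micros)), 0);
    }
    for micros in [68, 70, 75] {
        assert_eq!(pulse_to_bit(Duration::from_micros(micros)), 1);
    }
    println!("the cutoff separates both ranges");
}
```

Note that 35 μs sits much closer to the “0” range than to the “1” range. A value nearer the midpoint (about 49 μs) would tolerate more timing jitter on “1” pulses.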

I also want to separate reading the data from parsing it, so that while receiving, no CPU cycles are spent on anything other than reading data from the device.

The “raw data” will be represented by an `Event` structure, which holds a timestamp and an event type:

```rust
#[derive(Debug, PartialEq)]
enum EvenType {
    RisingEdge,
    FallingEdge,
}

#[derive(Debug)]
struct Event {
    timestamp: time::Instant,
    event_type: EvenType,
}

impl Event {
    pub fn new(timestamp: time::Instant, event_type: EvenType) -> Self {
        Event { timestamp, event_type }
    }
}
```

To convert the vector of events into a vector of 1s and 0s, I use the handy `.windows()` slice method:

```rust
fn events_to_data(events: &[Event]) -> Vec<u8> {
    events
        // Look at each pair of consecutive edges.
        .windows(2)
        // A falling edge ends a high pulse; keep the pulse length.
        .filter_map(|pair| match pair[1].event_type {
            EvenType::FallingEdge => Some(pair[1].timestamp - pair[0].timestamp),
            EvenType::RisingEdge => None,
        })
        // High pulses longer than 35 µs are 1s, shorter ones are 0s.
        .map(|elapsed| if elapsed.as_micros() > 35 { 1 } else { 0 })
        .collect()
}
```

The `read_events` function should only record the timestamp and type of each change:

```rust
fn read_events(line: &Line, events: &mut Vec<Event>, contact_time: time::Duration) {
    // HIGH and LOW are the u8 constants 1 and 0.
    let input = line
        .request(LineRequestFlags::INPUT, HIGH, "read-data")
        .unwrap();
    let mut last_state = input.get_value().unwrap();
    let start = time::Instant::now();
    while start.elapsed() < contact_time {
        let new_state = input.get_value().unwrap();
        if new_state != last_state {
            // Take the timestamp right away; decoding happens later.
            let timestamp = time::Instant::now();
            let event_type = if last_state == LOW && new_state == HIGH {
                EvenType::RisingEdge
            } else {
                EvenType::FallingEdge
            };
            events.push(Event::new(timestamp, event_type));
            // Enough edges for a full transmission; stop polling.
            if events.len() >= 83 {
                break;
            }
            last_state = new_state;
        }
    }
}
```

The events vector is created outside of this function. The request to the device has already been sent by the time this function runs, and spending time creating a new vector at that point can lead to lost events.
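Because `events_to_data` only looks at the time between edges, it can be exercised without the sensor. This self-contained sketch repeats `EvenType`, `Event` and `events_to_data` (keeping the `EvenType` spelling). It pre-allocates the vector with `Vec::with_capacity`, one of the improvements listed in the conclusion, and then feeds it synthetic edges. The `push_bit` helper and the 50 μs low period between bits are assumptions for illustration, not measured values.

```rust
use std::time::{Duration, Instant};

#[derive(Debug, PartialEq)]
enum EvenType {
    RisingEdge,
    FallingEdge,
}

#[derive(Debug)]
struct Event {
    timestamp: Instant,
    event_type: EvenType,
}

/// Same logic as `events_to_data` in the article.
fn events_to_data(events: &[Event]) -> Vec<u8> {
    events
        .windows(2)
        .filter_map(|pair| match pair[1].event_type {
            EvenType::FallingEdge => Some(pair[1].timestamp - pair[0].timestamp),
            EvenType::RisingEdge => None,
        })
        .map(|elapsed| if elapsed.as_micros() > 35 { 1 } else { 0 })
        .collect()
}

/// Hypothetical helper: appends a rising edge and, `high_micros` later, a falling edge.
fn push_bit(events: &mut Vec<Event>, at: &mut Instant, high_micros: u64) {
    events.push(Event { timestamp: *at, event_type: EvenType::RisingEdge });
    *at += Duration::from_micros(high_micros);
    events.push(Event { timestamp: *at, event_type: EvenType::FallingEdge });
    // Assumed 50 µs low period before the next bit starts.
    *at += Duration::from_micros(50);
}

fn main() {
    // Allocate up front so recording edges never triggers a reallocation.
    let mut events: Vec<Event> = Vec::with_capacity(83);
    let mut at = Instant::now();
    for &high in &[26, 70, 70, 26] {
        push_bit(&mut events, &mut at, high);
    }
    println!("{:?}", events_to_data(&events)); // [0, 1, 1, 0]
}
```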

Combining it all together and testing

In `main.rs` I declare two functions. The first, `try_read`, takes the bits we were able to pull from the device and checks whether they can be converted to a reading:

```rust
fn try_read(gpio_number: u32) -> Option<Reading> {
    // `push_pull` is defined in the repository; it returns the bits read from the device.
    let all_data = push_pull(gpio_number);
    if all_data.len() < 40 {
        println!("Sad, not enough data was read");
        return None;
    }
    // Try every run of 40 consecutive bits until one decodes into a valid reading.
    for data in all_data.windows(40) {
        match Reading::from_binary_vector(data) {
            Ok(reading) => return Some(reading),
            Err(e) => println!("Error: {:?}", e),
        }
    }
    None
}
```

The `main` function simply tries to read every 5 seconds:

```rust
fn main() {
    let gpio_number = 4; // GPIO4 (pin 7)
    let sleep_time = time::Duration::from_secs(5);
    for _ in 1..30 {
        println!("Sleeping for another {:?}, to be sure that device is ready", sleep_time);
        thread::sleep(sleep_time);
        match try_read(gpio_number) {
            Some(reading) => println!("Reading: {:?}", reading),
            None => println!("Unable to get the data"),
        }
    }
}
```

The output looks like this:

```text
Compiling rust_gpio v0.1.0 (/home/pi/workspace/rust_gpio)
Finished dev [unoptimized + debuginfo] target(s) in 4.02s
Running `target/debug/rust_gpio`
Sleeping for another 5s, to be sure that device is ready
Error: ParityBitMismatch
Error: ParityBitMismatch
Unable to get the data
Sleeping for another 5s, to be sure that device is ready
Error: ParityBitMismatch
Reading: Reading { temperature: 23.2, humidity: 37.7 }
Sleeping for another 5s, to be sure that device is ready
Error: OutOfSpecValue
Reading: Reading { temperature: 23.2, humidity: 68.2 }
Sleeping for another 5s, to be sure that device is ready
Error: ParityBitMismatch
```

As you can see, it is not always possible to read the data, and the first attempt often fails with `ParityBitMismatch`.

Note on a different approach

Pulling doesn’t look like the most efficient way of reading data, and subscribing to events with the `.events()` method is supposed to be more efficient. However, I was unable to get enough transitions in the callback, and I haven’t investigated why this happens.

Conclusion

Making binary data more readable simplifies experimentation a lot!

Only tests can turn “some Rust code” into “Rust code you trust”.

A couple of improvements can be made, such as:

- Skipping the first bit if 41 bits were received, as it is the signal bit
- Allocating space for the vector of events upfront
- Making the pin a command-line argument
- More extensive testing
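The first improvement could look roughly like this sketch. It assumes, as the list says, that a 41st bit at the front is the sensor's signal bit. `strip_signal_bit` is a hypothetical name. It returns `None` for any other length, so the caller can fall back to the existing sliding-window search in `try_read`.

```rust
/// Hypothetical helper: returns exactly 40 data bits, dropping a leading
/// signal bit when 41 bits were captured.
fn strip_signal_bit(bits: &[u8]) -> Option<&[u8]> {
    match bits.len() {
        40 => Some(bits),
        41 => Some(&bits[1..]),
        _ => None,
    }
}

fn main() {
    let mut captured = vec![1u8]; // the signal bit
    captured.extend(std::iter::repeat(0u8).take(40));
    let data = strip_signal_bit(&captured).unwrap();
    println!("{} bits, first = {}", data.len(), data[0]); // 40 bits, first = 0
}
```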

The full example is available in the GitHub repository citizen-stig/gpio-am2302-rs.